Vulnerability digging with CodeQL

Using CodeQL based variant analysis to find vulnerabilties

-

-

- Name

- Timo Müller

-

- Name

- Hans-Martin Münch

-

Introduction

CodeQL is the semantic code analysis engine from GitHub which can be used to discover vulnerabilities in different code bases. It allows us to Query code as if it were data, and helps to describe and identify insecure code patterns. Especially the ability to easily describe code flows from a source to sink, even if variables are reassigned or modified multiple times, is quite impressive. Over the last two years, several interesting blog posts and vulnerability reports have been published that impressively demonstrated the power of this approach.

We wanted to gain some practical experience with CodeQL and see how easy (or difficult) it is to identify vulnerabilities with it, ideally with real world examples. Since we have some experience with JMX, we decided to look at CVE-2016-3427 and its variants. This post first provides an overview of the vulnerability and then then describes how we created the CodeQL query to identify vulnerable code paths. It should give security researchers a general idea how to approach bughunting with CodeQL, but does not contain a introduction to CodeQL itself.

The vulnerability: CVE-2016-3427

CVE-2016-3427 references a deserialization vulnerability in the authorization process of Java JMX. It was originally reported to Oracle by Pierre Ernst. The vulnerability is caused by the fact, that the “RMIServer.newClient” method does not just accept a String (username/password), but arbitrary objects as method parameter. We assume that Oracles initial intend was to create a flexible interface that supports various authentication types.

If JMX is accessible over RMI, we can exploit this by creating a minimal client that sends our malicious serialized object to the RMI service. The following example uses the CommonsCollections6 gadget from Ysoserial:

String hostName = "192.168.1.11";

int registryPort = 1099;

Registry registry = LocateRegistry.getRegistry(hostName, registryPort);

Object gadget = new CommonsCollections6().getObject("touch /tmp/pwned");

RMIServer rmiServer = (RMIServer) registry.lookup("jmx-rmi");

RMIConnection rmiConnection = rmiServer.newClient(gadget);

rmiConnection.close();

When this vulnerability was published, we did not pay much attention to it as the underlying RMI protocol also uses serialized objects. Directly exploiting the deserialization issues in RMI itself provided a more general approach that still worked after Oracle patched CVE-2016-3427.

Oracles patch for CVE-2016-3427

Oracle patched this issue in JDK 8u91. The vulnerability was mitigated by installing a filter that by default only allows the usage of String objects. To quote the release notes:

A new java attribute has been defined for the environment to allow a JMX RMI JRMP server to specify a list of class names. These names correspond to the closure of class names that are expected by the server when deserializing credentials. For instance, if the expected credentials were a List, then the closure would constitute all the concrete classes that should be expected in the serial form of a list of Strings.

By default, this attribute is used only by the default agent with the following filter:

{ "[Ljava.lang.String;", "java.lang.String" }

To enable JMX support most applications use the standard JMX-RMI implementation that can be started/configured by passing addition command line arguments to the Java Runtime Environment. After updating to JDK8u91, these installations are no longer vulnerable, as the default implementation contains the described filter. However, there is also the case where the JMX-RMI service is created within the Java code itself. This is only done by a small number of applications, mostly as they need more control over the JMX service, for example if they want to support authentication of JMX clients against their own user database.

This special case is interesting for attackers as the fix requires that developers pass the attribute jmx.remote.rmi.server.credential.types as part of the environment to the JMX service. The JDK 8u91 release notes provide a minimal example:

Map<String, Object> env = new HashMap<>(1);

env.put (

"jmx.remote.rmi.server.credential.types",

new String[]{

String[].class.getName(),

String.class.getName()

}

);

JMXConnectorServer server = JMXConnectorServerFactory.newJMXConnectorServer(url, env, mbeanServer);

This is an example of missing the “fail savely” security-design pattern: It moves the burden of fixing the issue to the developer, which must be aware of the potential security issue.

With the release of Java 10, this approach is deprecated as Java 10 introducted support for CREDENTIALS_FILTER_PATTERN. The code is similar, however the filter pattern can be described in the same format used in ObjectInputFilter.Config.createFilter(java.lang.String).

Map<String, Object> env = new HashMap<>(1);

env.put(RMIConnectorServer.CREDENTIALS_FILTER_PATTERN, "java.lang.String;!*"); // Allow java.lang.String, deny the rest

JMXConnectorServer server = JMXConnectorServerFactory.newJMXConnectorServer(url, env, mbeanServer);

Vulnerability examples: CVE-2016-8735 (Apache Tomcat JmxRemoteLifecycleListener) and Apache Cassandra

An actual example for a custom implementation of a JMX service is Tomcats “JmxRemoteLifecycleListener”. This implementation allows administrators to configure a fixed port of the RMI listener, making it way easier to create/apply firewall-rules.

This was before the Java 10 release, so the Tomcat developers fixed this issue in this commit, by setting the “jmx.remote.rmi.server.credential.types” property.

Another example is Apache Cassandra, which provides it’s own JMX implementation to include Cassandras own auth subsystem. The Cassandra developers silently patched the issue in August 2020.

Building the CodeQL query

First of all, a big thanks to the CodeQL masters from GitHub for all the help with (query) issues. Without all the small (and sometimes big) hints and syntactic tips, this query probably would’ve been taken way longer to develop, and less efficient. Please note that we ourselves are at the early learning stages of effectively using CodeQL, so bear that in mind for the following query explanations. A lot in our approach and the final query can probably improved, and we’re always happy to do better. If you have any suggestions for improvements or feedback feel free to contact us.

Before we can start to write the actual query, we need to have a minimal test case that we can use to create a CodeQL database. We used a Maven based Java project with the following “test” code:

Map<String, Object> env = new HashMap<String, Object>();

env.put(RMIConnectorServer.CREDENTIALS_FILTER_PATTERN, "java.lang.String;!*");

JMXConnectorServerFactory.newJMXConnectorServer(null, env, null);

The CodeQL database was created manually through the CodeQL command line. The actual query development was mainly done with the official VS Code CodeQL workspace, as it was quite convenient to set up and run. This worked without any issues, as long as VS Code and the command line use the same CodeQL version.

We start by importing the CodeQL classes that we will need for this query:

import java

import semmle.code.java.dataflow.DataFlow

import semmle.code.java.Maps

semmle.code.java.Maps is a helper class that provides code to deal with Java Maps. CodeQL provides a large number of useful helper classes, which we didn’t know when we started. Our first query attempt therefore contained a lot of additional code to identify access to Java Maps instances which was actually not needed.

CodeQL data flows

The DataFlow classes allows you to map dataflows within CodeQL. This class provides you with functionality to map code paths from a source to a sink. It basically looks like this, we just need to to overwrite the isSource and isSink predicates:

class MyDataFlowConfiguration extends DataFlow::Configuration {

MyDataFlowConfiguration() { this = "MyDataFlowConfiguration" }

override predicate isSource(DataFlow::Node source) {

...

}

override predicate isSink(DataFlow::Node sink) {

...

}

...

Our source will be the HashMap that will be passed as a “env” to the JMXConnectorServer as second argument.

Map<String, Object> env = new HashMap<String, Object>();

The sink is HasMap that gets passed as second argument to JMXConnectorServerFactory.newJMXConnectorServer:

JMXConnectorServerFactory.newJMXConnectorServer(url, env, mbeanServer);

When we started to create the query, we first attemted to re-create a “classic” code-review approach that we would also follow in a manual code review:

- Find all the sources (HashMap initializations, like

env = new HashMap) - Identify which of these maps gets passed the sink (the call to

newJMXConnectorServer(...)) - Analyze the DataFlow and check if a secure filter is set or not. This requires us to check the value of the first argument for every

env.putcall. If theRMIConnectorServer.CREDENTIALS_FILTER_PATTERNproperty is set, the implementation is safe.

Unfortunately, this approach adds a lot complexity, as we will first identify the DataFlow and then have to confirm if it is save or insecure. Instead it is way easier to only map “secure” DataFlows by using the call to env.put(RMIConnectorServer.CREDENTIALS_FILTER_PATTERN, ???) as source. Luckily semmle.code.java.Maps already defines a MapPutCall, through which we can easily detect code were something is written into a map. We create a CodeQL predicate that does the following:

- Check if a key-value is written into a map. We can use

MapPutCallfor that - The

keyof the map needs to be the constantRMIConnectorServer.CREDENTIALS_FILTER_PATTERN, orjmx.remote.rmi.server.credentials.filter.pattern.

Here the code of the predicate:

// Pass in our source and check if it matches with our conditions

private predicate putsCredentialtypesKey(Expr qualifier) {

// Use the MapPutCall helper to track Map operations

exists(MapPutCall put |

// Check if a Map is populated with one of the following two keys

put.getKey().(CompileTimeConstantExpr).getStringValue() =

["jmx.remote.rmi.server.credentials.filter.pattern"]

or

put.getKey().(FieldAccess).getField().hasQualifiedName("CREDENTIALS_FILTER_PATTERN")

}

}

// [...]

The predicate basically evaluates to true or false (this is probably not technical correct, but easily understandable). Using the predicate keeps the code more clean, and helps us to easily add additional source checks if required. The code is later called from the isSource predicate of the DataFlow.

The predicate will identify calls like this:

env.put(RMIConnectorServer.CREDENTIALS_FILTER_PATTERN, "java.lang.String;!*"); // Allow java.lang.String, deny the rest

Using these calls as source for our DataFlow will simplify the final query for the following reasons:

- If a defined DataFlow exists, it is secure, we don’t have to verify that the flow is secure, or is not secure

- If the code base contains a call to JMXConnectorServer that is not also part of our secure DataFlows, we can assume that the initialization is not secure

Let’s go ahead with defining the sink. We want to identify maps that go as second argument into calls to JMXConnectorServerFactory.newJMXConnectorServer, therefore we first need to dentify calls for that method. Please note that the actual predicate is a bit longer, as there are actually multiple ways to create a RMI-based JMX service. As we want to keep the example simple, we did not include the full predicate here (you can have a look at the final CodeQL query if you want).

// Evaluates true if the given method matches our definitions (must be call to newJMXConnectorServer)

predicate isRmiOrJmxServerCreateMethod(Method method) {

// The method must have the name "newJMXConnectorServer"...

method.getName() = "newJMXConnectorServer" and

// ... and it must belong to "javax.management.remote.JMXConnectorServerFactory". (this reduces false-positives)

method.getDeclaringType().hasQualifiedName("javax.management.remote", "JMXConnectorServerFactory")

}

Here the DataFlow implementation that uses the previously defined predicates.

class SafeFlow extends DataFlow::Configuration {

SafeFlow() { this = "SafeFlow" }

override predicate isSource(DataFlow::Node source) {

putsCredentialtypesKey(source.asExpr())

}

/* We tell te DataFlow what we define as sink.

The sink must be the seca "newJMXConnectorServer" call, but also...

our defined sink must be the second parameter to the method call.

*/

override predicate isSink(DataFlow::Node sink) {

exists(Call c |

// Define our expectations for the method call (it must be newJMXConnectorServer)

isRmiOrJmxServerCreateMethod(c.getCallee())

|

// Define that our source must be the second parameter to our newJMXConnectorServer call

sink.asExpr() = c.getArgument(1)

)

}

}

The actual CodeQL query

As of now we only defined our conditions for a flow, but we haven’t queried anything yet. CodeQL uses a syntax similar to an SQL query with a select, where, from statement. The basic query introduction explains this quite well.

The select statement is the only major thing missing from our query. At first we define required variables (within from), and the where statement. It should be noted that CodeQL is evaluated from bottom up, so we’ll start with the select. Here we basically “gather” and display the results in variable c and envArg. Most of the magic is happening within the where clause. From top to bottom here’s what we’re doing:

isRmiOrJmxServerCreateMethod(c.getCallee())finds all calls tonewJMXConnectorServer. We simply call the predicate that we also used in our DataFlow sinkc.getArgument(1)gets all second arguments that are passed to tonewJMXConnectorServer. The result is assigned to our Expression variableenvArg.- Ignore all results which are part of the “safe” DataFlow. (The environment map which is used for the JMXConnectorServer contains the key

RMIConnectorServer.CREDENTIALS_FILTER_PATTERN)

from Call c, Expr envArg

where

(isRmiOrJmxServerCreateMethod(c.getCallee())) and

envArg = c.getArgument(1) and

not any(SafeFlow conf).hasFlowToExpr(envArg)

select c, getRmiResult(envArg), envArg, envArg.toString()

An observing reader might has noticed the getRmiResult(envArg) within the select clause. This function was defined in order to handle the edge case where a null environment is passed to newJMXConnectorServer. In this case we don’t really have a DataFlow with a Map sink. For this reason, we check if envArg is null and change the select output text accordingly (note the $@ string within the second defined results, as placeholder for envArg):

string getRmiResult(Expr e) {

// We got a Map so we have a source and a sink node

if e instanceof NullLiteral

then

result =

"RMI/JMX server initialized with a null environment. Missing type restriction in RMI authentication method exposes the application to deserialization attacks."

else

result =

"RMI/JMX server initialized with insecure environment $@, which never restricts accepted client objects to 'java.lang.String'. This exposes to deserialization attacks against the RMI authentication method."

}

So far we only query for one vulnerable server instantiation (leading to false-negatives), and we detect only one filter instantiation (leading to false-positives). Extending the query is easy, as only additional method/constructor calls, and additional environment key-value pairs need to be added. For this task the already existing predicates can be easily extended. The final query can be found here.

You might notice that the query does not actually check the value that gets written to the Map. This would require us to parse the provided filter String value within CodeQL. While this could be done, for example with regex expressions, it would significantly increase the complexity (we would be required to reimplement the filter parsing implemented in Java) and runtime (potentially undefined runtime complexity for regex expressions) of the query.

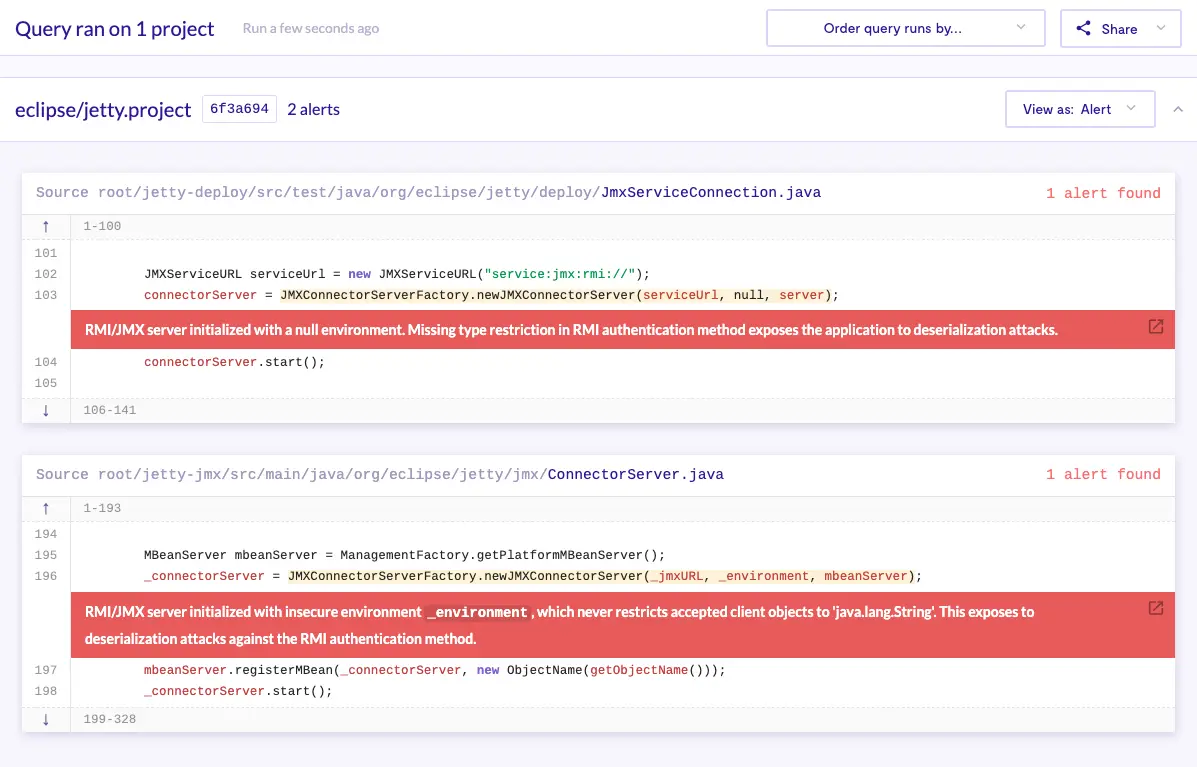

A test run of the query against Eclipse Jetty can be found within the interactive LGTM query console here, with the corresponding GitHub issue. Illustrated within the following screenshot we can see the overview of the LGTM query console.

Summary

The learning curve for CodeQL is quite steep, but will probably flatten slowly as more resources and documentation around the topic is created. The difference in required quality between prototyping and actually merging a pull request is immense. A quick-and-dirty CodeQL query can help to fasten up bug hunting, within a limited scope and a dedicated person sifting through false positives. However, (just assuming here) GitHub thinks in a way bigger scope (millions of repos), where execution time and false-positives need to be minimized. This raises the required effort from a proof-of-concept to a “working product” significantly. However, it was a pleasure to work on the project, and the GitHub team (and community) is very helpful in general. Also it feels great to provide a query that can now help to secure code repositories at scale. You can also receive a great payout from the bug bounty program for CodeQL queries.

Creating CodeQL queries differs a lot from your normal coding, therefore it takes some time to get used to it. In our opinion the documentation is often not very descriptive, and at least for us, this resulted in some trial and error every once in a while. GitHub provides a lot of learning content, for example CodeQL sessions by GitHub on YouTube, a learning lab, or CodeQL CTFs. If you want to get started with CodeQL, we recommend to go through “all” of it. Also, take some time and browse the documentation for the helper classes that are provided by CodeQL and the already existing CodeQL queries.

With a look in the future, DevOps teams will most try to employ a shift-left approach as much as possible, as it saves time and resources during development. Static tool analysis provide a convenient and powerful tool to integrate code scanning into the development process, and help to define a security base-level, detached from the skill of individuals. This will raise the bar for security vulnerabilities and reduce the amount of low hanging fruits to catch.

Thanks to Florencia Viadana on Unsplash for the title picture.